MiniWorld: A VizDoom Alternative for OpenAI Gym

VizDoom and DMLab are two 3D simulated environments commonly used in the reinforcement learning community. VizDoom is based on the original Doom game, and DMLab is based on the Quake 3 game engine. Recently, DeepMind has produced impressive results using DMLab, showing that neural networks trained end-to-end can learn to navigate 3D environments using visual inputs alone, and even execute simple language commands.

These simulated environments are popular and useful, but they can also be difficult to work with. VizDoom can be tricky to get running on your system; there are unfortunately many dependencies, and if any of them fail to build and install, you’re going to have a bad time. Furthermore, both VizDoom and DMLab are fairly impractical to customize. The Doom and Quake game engines are written in (poorly commented) C code. Also, because VizDoom is based on a game from 1993, it stores its assets in a fairly archaic binary format (WAD files). DMLab is nice enough to provide a scripting layer which allows you to create custom environments without touching C code or using a map editor. However, this scripting layer is in Lua and is poorly documented.

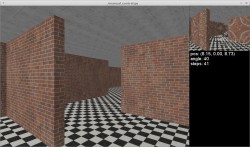

The 3D environments used in reinforcement learning experiments are typically fairly simple (e.g. mazes, rooms connected by hallways, etc.), and it seems obvious to me that the full Quake 3 game engine is overkill for what DeepMind has built with DMLab. As such, I set out to build MiniWorld, a minimalistic 3D engine for the purpose of building OpenAI Gym environments. MiniWorld is written entirely in Python and uses Pyglet (OpenGL) to produce 3D graphics. I wrote everything in Python because this language has become the “lingua franca” of the deep learning community, and I wanted MiniWorld to be easily modified and extended by students. Python is not known to be a fast language, but since the bulk of the work (3D graphics) is GPU-accelerated, MiniWorld can run at over 2000 frames per second on a desktop computer.

MiniWorld has trivial collision detection which prevents the agents from going through walls. It’s not physically accurate, and not appropriate for tasks such as robotic arm control. However, I believe it can be useful for navigation tasks where you want to procedurally generate a variety of simple environments. It can render indoor (and fake outdoor) environments made of rooms connected by hallways. The package is designed to make it easy to generate these environments procedurally using code; that is, you never have to produce map files. Because MiniWorld has been intentionally kept minimalistic, it has very few dependencies: Pyglet, NumPy and OpenAI Gym. This makes it easy to install and get working almost anywhere. This is an asset in the world of machine learning, where you may have to get experiments running on multiple compute clusters.
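To give a feel for what "trivial" collision detection can look like, here is a minimal sketch. It is not MiniWorld's actual code: it assumes a top-down 2D view where the agent is a circle and each wall is a line segment, and the names `point_segment_distance` and `try_move` are made up for this illustration. A move is simply rejected if it would bring the agent closer to a wall than its own radius.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from 2D point p to the line segment from a to b."""
    px, py = p
    ax, ay = a
    bx, by = b
    abx, aby = bx - ax, by - ay
    seg_len_sq = abx * abx + aby * aby
    if seg_len_sq == 0.0:
        # Degenerate wall: both endpoints are the same point
        return math.hypot(px - ax, py - ay)
    # Project p onto the wall's line, then clamp to the segment
    t = ((px - ax) * abx + (py - ay) * aby) / seg_len_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * abx, ay + t * aby
    return math.hypot(px - cx, py - cy)


def try_move(pos, step, radius, walls):
    """Return the new position, or the old one if the move hits a wall.

    Hypothetical helper: walls is a list of (start, end) point pairs.
    """
    new_pos = (pos[0] + step[0], pos[1] + step[1])
    for a, b in walls:
        if point_segment_distance(new_pos, a, b) < radius:
            return pos  # blocked: the agent stays where it was
    return new_pos


# A single wall along x = 1, and an agent of radius 0.2 walking towards it
walls = [((1.0, -1.0), (1.0, 1.0))]
pos = (0.0, 0.0)
pos = try_move(pos, (0.5, 0.0), 0.2, walls)  # free space, the agent moves
pos = try_move(pos, (0.4, 0.0), 0.2, walls)  # too close to the wall, rejected
```

A check like this only looks at the destination, so a step longer than the agent's diameter could jump over a wall. That is acceptable for small, fixed-size navigation steps, but it is one reason this kind of approach is not suitable for physically accurate simulation.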

There are many use cases which MiniWorld cannot satisfy, but I believe that despite its simplicity, it can be a useful research tool. I’ve integrated domain randomization features which make it possible to do sim-to-real transfer experiments, similar to what I had previously implemented in the gym-duckietown environment. Domain randomization means randomly varying parameters of the environment to prevent a neural network from overfitting to the simulation, hopefully forcing the neural network to generalize to the real world. The videos below show a small robot trained to follow a red box in a simulated environment, and then tested in the real world.
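As a rough illustration of the domain randomization idea described above, the sketch below draws a fresh set of environment parameters at the start of every episode. The parameter names and ranges (`light_intensity`, `camera_fov_deg`, `forward_step`) are invented for this example and are not MiniWorld's actual settings; the point is only that each episode sees a slightly different world.

```python
import random

# Hypothetical parameters and (min, max) ranges, chosen for illustration only
PARAM_RANGES = {
    "light_intensity": (0.5, 1.5),
    "camera_fov_deg": (55.0, 65.0),
    "forward_step": (0.12, 0.18),
}


def sample_params(ranges, rng):
    """Draw one value per parameter, uniformly within its range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}


# Seeded generator so that experiments can be reproduced
rng = random.Random(0)

for episode in range(3):
    params = sample_params(PARAM_RANGES, rng)
    # An environment would apply these values when it resets,
    # before the agent receives its first observation.
    print(episode, {name: round(value, 3) for name, value in params.items()})
```

Because the network never sees exactly the same lighting, camera or motion parameters twice, it cannot rely on any one of them, which is the property you hope will carry over to the real world.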

If you are interested in using MiniWorld for your research, you can find the source code in this repository. Bug reports, contributions and feature requests are welcome. If you feel that some specific feature would be particularly useful for your research, feel free to open an issue on GitHub. You can influence the course of this project.